What is a Decision Model?

A decision model is a formal procedure for determining which action to take. The decision might concern a personal task, a team of people in a business, or even a national population.

Decision models are often, but not always, algorithmic in nature. Decision Theory is an academic rabbit hole all too easy to disappear into, with warrens tunnelling under the fields of neuroscience, philosophy, computer science, social engineering and many others. I’m interested in why decision models are important, now and in the unfolding future.

In a recent report by Thinkers50 and the European Centre for Strategic Innovation, they concluded (my emphasis): Rolling out AI enterprise-wide requires every organisation to develop a judgment protocol, a system that legitimises the exercising of judgment within a company across all levels — and one that will change the century-old “command and control” philosophy that many companies still use to make decisions.

Decision models are everywhere

Our lives are, of course, influenced by a variety of decision models.

Many aspects of our personal finance are governed by decision models. When your payment card is blocked while overseas on holiday, that’s down to an algorithmic decision model. The fact that the model didn’t spot the series of payments leading to the airport just shows how unsophisticated some decision models still are.

Loan and mortgage approvals pass through decision models too. An extreme application is high-frequency trading on the stock market, where models decide what to buy or sell, and at what price and volume. In venture capital, reportedly, when GV staff want approval to make a new investment, they defer to “The Machine”, an algorithmic decision model.

If you’ve seen or read Moneyball you’ll know how the Oakland Athletics baseball team benefited from a data-driven decision model suggesting which players to invest in.

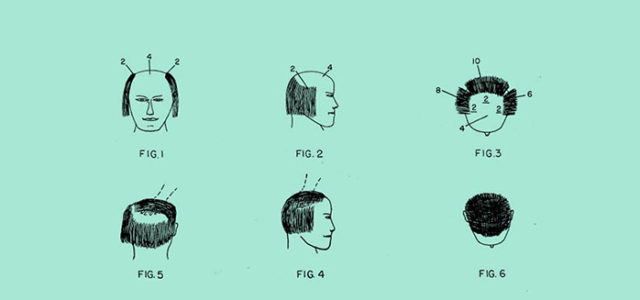

Decision models are everywhere. You’re probably using some personally on a regular basis. Even if you’re not consulting a mental version of the Eisenhower Matrix on an hourly basis, using the model to compare urgency vs importance, I bet you know someone who is. I know for a fact many of the people in my industry employ de Bono’s Six Thinking Hats almost religiously. Even flipping a coin or spinning a wheel of fortune are decision models.
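To show how small a decision model can be, here is a minimal sketch of the Eisenhower Matrix in Python. It assumes the common four-quadrant reading of the matrix (do, schedule, delegate, drop). The names `Action` and `eisenhower`, and the sample tasks, are my own illustrations rather than part of any standard tool.

```python
from enum import Enum


class Action(Enum):
    """The four quadrants of the matrix (labels are illustrative)."""
    DO = "do it now"
    SCHEDULE = "schedule it"
    DELEGATE = "delegate it"
    DROP = "drop it"


def eisenhower(urgent: bool, important: bool) -> Action:
    """Map a task's urgency and importance to one of the four quadrants."""
    if important:
        return Action.DO if urgent else Action.SCHEDULE
    return Action.DELEGATE if urgent else Action.DROP


if __name__ == "__main__":
    # Hypothetical tasks: name -> (urgent, important)
    tasks = {
        "Fix production outage": (True, True),
        "Plan next quarter": (False, True),
        "Answer routine email": (True, False),
        "Reorganise desktop icons": (False, False),
    }
    for name, (urgent, important) in tasks.items():
        print(f"{name}: {eisenhower(urgent, important).value}")
```

The whole model is two yes/no questions and a lookup, which is part of why it is so easy to apply mentally.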

If you’re at risk of analysis paralysis, I recommend having a look at NOBL’s decision reference tool, or The Decision Book, which may help you decide how to decide.

Why are they proliferating?

Decision models may be increasing in popularity for a number of reasons.

Research in the fields of neuroscience and behavioural economics continues to provide evidence that human beings are actually pretty poor at making decisions. We are irrational, as much as we might think we’re not. As Damásio says: We are feeling machines that think, not thinking machines that feel.

We are also prone to a set of biases. One might argue that it is impossible for a human to be truly objective about anything. Neither irrationality nor bias is conducive to good decision-making. Decision models can help.

Uniquely for our time, we are also “blessed” with vast quantities of data to inform our decision-making. While “information overload” has been a popular term for almost 50 years, Alvin Toffler couldn’t have foreseen in 1970 the utter vastness of the data-sphere we now inhabit. Increasingly sophisticated algorithms are, however, starting to make data-driven decision models more practical.

The issue of trust

Which leads me to the issue of trust. Some algorithmic decision models are biased too. It may be an over-simplification to say that humans transfer their biases into the algorithms, either naively during initial creation, or via exposure to the biases present in the huge human-created data sets they operate on. The consequences are concrete, though: who gets that loan, who is selected for interview, who gets a high score during assessment, who is granted parole, and so on.

One problem with algorithmic decision models is that they are often a Black Box: totally opaque, with the reasoning behind a given decision closed and mysterious. It’s Black Box decision models that gave rise to the “Computer says no” catchphrase on the sketch comedy Little Britain. It’s hard to trust a model where the decision-making process is totally hidden.

A transparent approach, a Glass Box, where we can see the inner workings of the model, would seem more trustworthy. However, given the complexity of even simple algorithms, would we be able to understand what’s actually going on? Recent research by René Kizilcec at Stanford suggests that trying to make modern algorithmic models transparent is a fool’s errand. The transparency can even backfire, generating more distrust.

Decision models that are neither totally transparent nor totally opaque appear to be the most trusted. We might call this sort of model a Translucent Box, such as The POP Method ® by Subsector. Judgment protocols, if aimed at legitimising the exercise of judgment within a company at all levels, require simple translucent decision models for teams in order to maximise trust.
