You no longer have the legitimate right to protest when someone uses your data. A long time ago it was accepted by you as part of the Internet social contract. That’s what takes to live in the digital age. You gave your data in exchange for an app, a personality test or even in return to download a report on the evolution of digitalization in Botswana.

Read original post in Spanish.

A. Property or Use?

Your data is no longer only yours. You gave it away and you received something in return. Now, I understand that your frustration does not come from the concept of property (in the digital age that property is already shared), I understand that your biggest frustration is derived from the use that other companies are doing on aggregated data built on behalf of your personal data.

In the new digital economy, we leave to the free will of a data scientist or a recently graduated mathematician the decision on whether the colour of my eyes is valid to calculate the premium of my car insurance renewal. We have let a predictive models expert decide if my height, where I live or my offspring has a direct relationship with my creditworthiness.

Actually, we live in the tyranny of correlations. Maths is the new gear that defines from the price of products and services granted by dynamic pricing models, to the justice applied when a grant is conceded. We are subsidized by a Monte Carlo model, game theory or Markov chains.

Since the first draft of the General Data Protection Regulation (GDPR) back in April 2016, I have been a fervent detractor of the consequences derived from the necessity of constant permission in the collection and processing of personal data.

I have helped hundreds of managers to understand the role of technology, data and people as levers of digital transformation both in the exploitation of actual business models and in the exploration of new ones.

Moreover, I have criticized privacy regulations for disrupting European competitive landscape in terms of data collection, process and optimization. In my opinion, the mistake was not in protecting property but in the use of personal data as part of inferences at an aggregate level.

B. Ruling the Algorithm: Towards the Data Expert Validator

I have changed my mind. Since The Guardian released the first article on Cambridge Analytica (The great British Brexit robbery: how our democracy was hijacked) back in May 2017, something has changed regarding data privacy worldwide.

Our data is no longer ours, the inferences and the decisions that are made from models in which our personal data is input, should have a certain level of scrutiny, analysis and audit.

Not a matter of justice, it is a matter of sensitivity towards a model in which it is possible to infer erroneous conclusions and derived responsibilities based on how these models were built. Predictive models quality and frequency supervision is and should be the focus of a certain level of privacy oversight. And I do speak of regulation as far as civil liability is concerned.

We live in an era in which data and technology have altered humanity. And yet, it is the people in my judgment who must remain responsible for the decisions made when evolutionary consequences are inferred.

The statement “not without my permission”, “not without my consent” may not have to refer to the use of my personal data. I have already given permission + consent by browsing on your website, downloading that report, doing that personality test or simply downloading an app to measure the decibels in a Justin Bieber concert.

Yes, I accept that you use my personal data, I gave it to you in return of something. It was a legitimate exchange. However, I never withdrew consent to use my personal data in a predictive model whose definition of constructs and variables ends up hurting me. I refuse my consent for that use. You have no longer my permission.

A new concept of corporate social responsibility emerges. And it is all about controlling, monitoring and validating the good use of corporate black box which handles large amounts of data. The new normal should be incorporating external validation into all predictive models and all algorithmic work carried out by large organizations in order to achieve transparency and accountability.

We all accept that we live in the 4th industrial revolution, an era in which data and technology change the way we draw progress. End users must have the right to act on the result of predictive models. That has not only to do with the right to rectification but also with transparency.

Those corporations willing to exercise transparency regarding the output of predictive models will generate trust. The war is not in the property. That has been the misleading goal of all privacy regulations. The battle is in the design, governance and rectification of algorithms. Otherwise, we could fall into the error of perpetuating biases and accepting decisions when an algorithm becomes a data set of a newly created algorithm.

This is an invitation for a collective work towards a higher degree of scrutiny regarding the design of predictive models and work on aggregated data. Which means creating the role of an independent supervisor for tasks such as data inputs, output models, design and definitions. The role of “IDEA” (Independent Data Expert Auditor) emerges.

A body of professionals who validate and guarantee that companies make a balanced and impartial use of predictive modelling. If we have been able to certify agile coaches, we can take care of the quality and the derivation of certain conclusions in terms of data. Otherwise, we will take the risk that such valuable work from data engineers and data scientists will only be validated by the most opportunistic executive criteria. And we already know the ending of that story. Growth or efficiency as unique indicators of success.

C. The tyranny of algorithms

Does it make any sense that an autonomous vehicle will prioritize occupants over pedestrians’ lives? Are we going to rely on an algorithm to solve the trolley problem (Wikipedia: trolley problem) when we already know that is a thought experiment in ethics?

If we let correlations and algorithms think, we take risk of rewarding masculine and white traits in searching for models of business success. An algorithm can not change historical series or the lack of diversity. Only humans can ask the right question when looking for new drifts in the “algorithm”.

If we looked for a predictive model of juvenile delinquency in America, we would conclude that being black, being part of unstructured family and living in low-income neighbourhoods would explain everything. Are we really creating predictive models or perpetuating biases? A predictive model will never find a solution for gender equality. It would only strengthen biases and intensify existing inequalities.

Only the creative intervention of humans can change the historical bias and introduce new hypotheses to validate. Humans can create a better world based on the use of data. A long time ago business schools included ethical reasoning to avoid such biases. However, there’ll always be a hidden space when the criterion of maximizing results is the unique performance metric for data scientists.

My friend Gam Dias illustrated me with this case regarding the exchange of food stamps in USA, as a perfect example of bias in algorithm when fighting fraudulent food stamp behaviour. USDA kicked out small business out food stamp program under the assumption of fraud. Consecutive multiple exchanges in short periods of time caused by the need to share transportation to food stores given the distance from their homes and the lack of private vehicles among food stamps holders were detected as fraud. As a consequence, some stores were permanently banned from the food stamp program. It is clear that someone stopped monitoring the model and reality became a bias. Damn reality when doesn’t fit my model!

The idea of designing a framework that enhances transparency by establishing certain rules of governance that allows users to “react” on the result of aggregated data outputs led by corporations, sets up a new fair pitch in which companies are accountable for the aggregate use of personal data.

Are we talking about Corporate Social Responsibility in the use of data? Maybe. My view is that we should talk about sustainability in the 4th industrial revolution. It is not about transforming black data boxes into transparent data boxes, but into responsible data boxes. Differentiation via data as a source of competitive advantage is a reality, and my proposal is to advocate for a reality in which organizations should be liable for the implications derived from the use of data on an aggregate level.

A few days ago Mark Zuckerberg acknowledged on a Facebook post (A Privacy-Focused Vision for Social Networking – March 6, 2019) that his company did not have a good reputation in the construction of privacy-based services. On the same post he admitted that Facebook new focus would be a range of new services safeguarding privacy:

“I understand that many people don’t think Facebook can or would even want to build this kind of privacy-focused platform — because frankly we don’t currently have a strong reputation for building privacy protective services, and we’ve historically focused on tools for more open sharing. But we’ve repeatedly shown that we can evolve to build the services that people really want, including in private messaging and stories.” (…)

“We plan to build this the way we’ve developed WhatsApp: focus on the most fundamental and private use case — messaging — make it as secure as possible, and then build more ways for people to interact on top of that, including calls, video chats, groups, stories, businesses, payments, commerce, and ultimately a platform for many other kinds of private services.”

Promise made! Facebook’s disrupting digital payments and e-commerce. For the sake of what? Are we willing to alter US presidential elections or Brexit referendum again without supervising the use of the data by any tech company?

It is time to open the doors and allow a third party to control value obtained from personal data. And that is, in my opinion, the new approach to privacy regulation (not property). We already gave data ownership away long time ago. I sincerely hope that this is no more than an “idea” but the proliferation of “IDEAs” (Auditor of Independent Data Experts).

Whether we like it or not regulation is an imperfect substitute for accountability and trust. After Trump and Brexit, I am afraid that there are many of us who distrust those to whom we give our data in exchange for what we consider valuable at that moment. it is time to monitor the use of aggregated data and stop having empty debates about property.

“I’m not upset that you lied to me, I’m upset that from now on I can’t believe you.” ― attr. to Friedrich Nietzsche

“Nicht daß du mich belogst, sondern daß ich dir nicht mehr glaube, hat mich erschüttert.”

Sources:

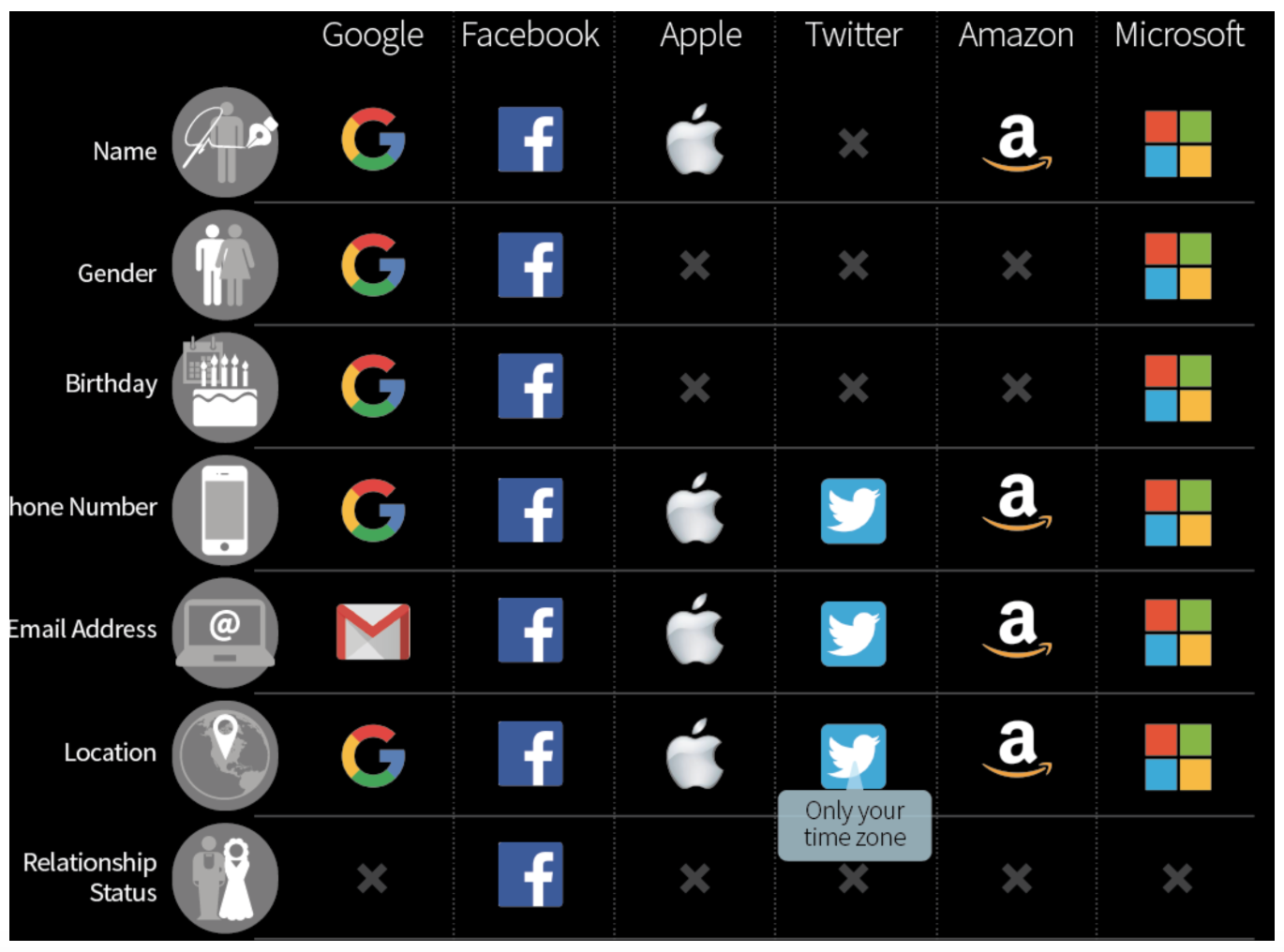

[1] What Apple, Amazon, Google, Facebook, Microsoft and Twitter Know About You (Infographic) https://www.digitalinformationworld.com/2018/12/here-is-what-the-big-tech-companies-know-about-you.html#postimages-1

[2] The Guardian: The great British Brexit robbery: how our democracy was hijacked by Carol Cadwalladr https://www.theguardian.com/technology/2017/may/07/the-great-british-brexit-robbery-hijacked-democracy

[3] Wikipedia: Trolley Problem https://en.wikipedia.org/wiki/Trolley_problem

[4] How an algorithm kicks small businesses out of the food stamps program on dubious fraud charges https://newfoodeconomy.org/usda-algorithm-food-stamp-snap-fraud-small-businesses/

[5] A Privacy-Focused Vision for Social Networking – 6 marzo 2019 https://www.facebook.com/notes/mark-zuckerberg/a-privacy-focused-vision-for-social-networking/10156700570096634/

Article by channel:

Everything you need to know about Digital Transformation

The best articles, news and events direct to your inbox

Read more articles tagged: Cyber Security, Featured, GDPR