Perceptual Mapping has been used in Marketing Research for more than 40 years and is now seen as standard practice by many marketing researchers.

Once upon a time, however, it was cutting edge and there was a lot of hype about it. Some proponents touted it as The Answer to just about any marketing challenge as well as The Way to white space – unexploited opportunities.

The hype subsided long ago and, if anything, Perceptual Mapping may now not be taken seriously enough. The fundamental ideas are tried and true, but it is sometimes conducted sloppily – almost as an afterthought – or seen as push-button analytics. A predictable consequence is that its quality and value have diminished in recent years.

Why do we do it?

Perceptual Mapping is known by various names, often just “mapping”, and Brand and User maps are its most common applications. It is also sometimes used to summarize segments derived from cluster analysis.

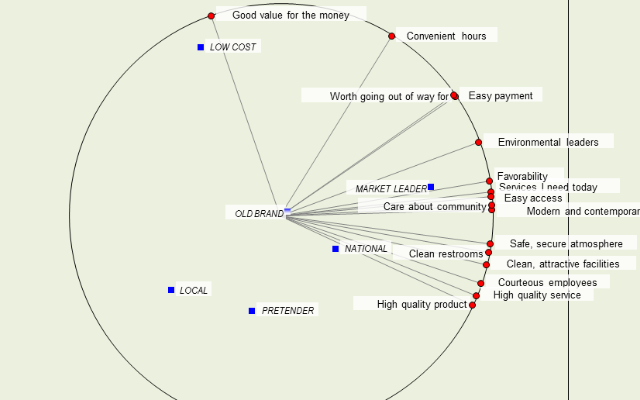

First, though, what do we mean by map? As shown in the MDPREF map above, Perceptual Maps are scatterplots which show how brands (or users of brands) are perceived by consumers. The maps are developed by one of several statistical methods I’ll touch on in a bit.

Perceptual Mapping can provide very useful insights into how consumers see a category. Particularly when combined with key driver analysis, it gives us clues as to the features of brands that most influence consumer choices. It can be very useful in spotting potential gaps in the market and providing direction for new product development.

Perceptual Mapping gives us an idea of how well our marketing is working and if it is achieving what we’d hoped. It cannot replace sales or customer data but it is a useful supplement to them. It also gives us a sense of the efficacy of competitors’ marketing efforts.

Sometimes the difference between how we (or our clients) perceive a category and how consumers perceive it is painfully obvious. An astute client of mine once conducted Perceptual Mapping using his salesforce as respondents and then later showed them their map alongside one developed from consumer data. Ouch!

What kind of data do I need?

Data are typically collected via “pick-any” grids, also known as association matrices, or by rating scales. When pick-any grids are used, respondents indicate the attributes they think apply to a selection of brands they know or, in the case of rating scales, how strongly they associate each of the attributes with these brands. Either procedure can be easily modified to gather perceptions of users of brands.
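To make the pick-any format concrete, here is a minimal sketch of how raw grid responses might be tabulated into a brand-by-attribute association matrix. The brand names, attribute labels and the `association_matrix` helper are all hypothetical, made up purely for illustration, and real survey exports will usually need reshaping first.

```python
from collections import Counter

# Hypothetical pick-any responses: one record per respondent, mapping each
# brand the respondent knows to the set of attributes ticked for that brand.
responses = [
    {"Brand A": {"trusted", "good value"}, "Brand B": {"innovative"}},
    {"Brand A": {"trusted"}, "Brand B": {"innovative", "good value"}},
    {"Brand A": {"good value"}, "Brand C": {"trusted", "innovative"}},
]

def association_matrix(responses):
    """Count how many respondents linked each attribute to each brand."""
    counts = Counter()
    for record in responses:
        for brand, ticked in record.items():
            for attribute in ticked:
                counts[(brand, attribute)] += 1
    brands = sorted({brand for brand, _ in counts})
    attributes = sorted({attribute for _, attribute in counts})
    # Rows are brands, columns are attributes; unticked pairs count as 0.
    table = [[counts[(b, a)] for a in attributes] for b in brands]
    return brands, attributes, table

brands, attributes, table = association_matrix(responses)
for brand, row in zip(brands, table):
    print(brand, dict(zip(attributes, row)))
```

A table like this is the aggregate input that several of the mapping methods discussed later work from. With rating scales, the cells would hold mean ratings rather than counts.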

The chief advantage of pick-any grids is that they are fast and easy for respondents to complete. The trade-off for this is less discrimination. Rating scales provide finer-grained data, but at the cost of respondent fatigue and possibly lower data quality when respondents are asked to rate many brands on a large number of attributes.

Occasionally, we show respondents pairs of brands and ask them to rate how similar the brands are, overall or with respect to specific attributes. The latter, in particular, is very tough on respondents. My experience may or may not be typical but I rarely see either being done anymore. Sorting exercises are also used but perhaps not as commonly as they should be.

Mapping is frequently part of brand tracking studies. Often the first wave is used as the benchmark and, in subsequent waves, brands are plotted “passively” in the perceptual space developed from the benchmark data. Depending on the frequency of the research and maturity of the product category, the map may be re-benchmarked every 1-3 years.
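As a rough illustration of “passive” plotting, the sketch below fits a two-dimensional space to a benchmark table and then places later-wave brands in that space without refitting it. It assumes a simple Principal Components style map computed with NumPy, which is only one of several ways this could be done (Correspondence Analysis handles the same task with supplementary points). The figures and the function names `fit_benchmark_space` and `project_passively` are invented for the example.

```python
import numpy as np

def fit_benchmark_space(benchmark, n_dims=2):
    """Fit a PCA-style space to a brands-by-attributes benchmark table."""
    X = np.asarray(benchmark, dtype=float)
    center = X.mean(axis=0)                      # benchmark attribute means
    _, _, Vt = np.linalg.svd(X - center, full_matrices=False)
    return center, Vt[:n_dims].T                 # means and map axes

def project_passively(wave, center, axes):
    """Place brands in the benchmark space using the benchmark means and axes."""
    return (np.asarray(wave, dtype=float) - center) @ axes

# Hypothetical % of respondents associating each attribute with each brand.
benchmark = np.array([
    [60, 30, 20],
    [25, 55, 30],
    [20, 25, 65],
    [40, 40, 35],
])
wave_2 = np.array([
    [60, 30, 20],   # first brand unchanged since the benchmark
    [30, 50, 35],
    [20, 30, 60],
    [45, 35, 40],
])

center, axes = fit_benchmark_space(benchmark)
benchmark_coords = project_passively(benchmark, center, axes)
wave_2_coords = project_passively(wave_2, center, axes)
print(np.round(wave_2_coords - benchmark_coords, 2))  # movement per brand
```

Because the axes are frozen, any movement between waves reflects changes in the brands' scores rather than changes in the map itself. That is the main reason for benchmarking in the first place.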

Social media data or data gathered by various implicit measurement techniques are used by some researchers jointly with – or in lieu of – conventional consumer survey data. I have also run maps based on POS scanner data and in sensory testing studies.

What method should I use?

You actually have many methods to choose from, each with its advantages and disadvantages. Some methods employ aggregate data, e.g., a simple matrix of attribute scores (rows) by brands or users (columns).

Others require raw (consumer-level) data. The aggregate approach is simple – perhaps too simple, since averaging discards information, and the resulting maps tend to exaggerate the distinctions between brands and their associations with the attributes.

Listed below are some mapping methods I have used at one point or another in my career.

Methods for aggregate (and semi-aggregate) data:

Methods for consumer-level data:

How do I read a map?

Methods based on consumer-level data require a bit more time and statistical expertise to construct and interpret, but this trade-off may be sensible in many circumstances. One benefit is that, with modern psychometric tools, it is possible to adjust for response-styles.

At the other extreme, simple line graphs (“snake charts”) or “radar charts” are sometimes used in place of mapping, but the plots can be hard to read and do not reveal statistically how the brands (or users) and attributes correlate with one another.

A compromise solution is to use Principal Components or Factor Analysis to reduce the attributes to their key underlying dimensions and then plot the brands’ dimension scores with snake charts or radar charts.
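The compromise described above might be sketched along the following lines. This is an illustration under stated assumptions, not a recommended recipe: the ratings are simulated, Principal Components are computed via a plain SVD of standardized ratings, and the helper name `brand_dimension_scores` is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Simulated consumer-level data: 300 ratings of 3 brands on 6 attributes.
n_ratings, n_brands = 300, 3
brand_ids = np.arange(n_ratings) % n_brands
brand_effects = np.array([
    [1, 1, 0, 0, 0, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 0, 0, 0, 1, 1],
], dtype=float)
ratings = rng.normal(size=(n_ratings, 6)) + brand_effects[brand_ids]

def brand_dimension_scores(ratings, brand_ids, n_dims=2):
    """Reduce attributes to principal components, then average by brand."""
    Z = (ratings - ratings.mean(axis=0)) / ratings.std(axis=0)
    _, _, Vt = np.linalg.svd(Z, full_matrices=False)
    loadings = Vt[:n_dims]                 # how attributes define each dimension
    scores = Z @ loadings.T                # respondent-level dimension scores
    brands = np.unique(brand_ids)
    means = np.vstack([scores[brand_ids == b].mean(axis=0) for b in brands])
    return brands, means, loadings

brands, brand_means, loadings = brand_dimension_scores(ratings, brand_ids)
print(np.round(brand_means, 2))            # one row per brand, one column per dimension
```

The rows of `brand_means` are what would go into the snake or radar chart, with the loadings used to name the dimensions.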

Some maps, such as those produced by Simple Correspondence Analysis, Biplots and MDPREF, automatically plot both brands and attributes. Multiple Correspondence Analysis, Factor Analysis, Principal Components Analysis and Discriminant Analysis maps can include attributes but some modelers elect to plot only the brand scores on each dimension in the scatterplot. Maps produced by Multidimensional Scaling and Hierarchical Cluster Analysis only show the brands, which is a disadvantage in my opinion.

Plotting algorithms for many routines – some of which are 3D – are programmed to fill out the available space and, unfortunately, I’ve seen clients use rulers to help them interpret maps. This is a bad idea and the maps should be interpreted directionally rather than absolutely.

The distances on a map should not be taken literally! In most situations, I favor a conservative “neighborhood” interpretation – brands and attributes in the same area of the map are more strongly (positively) correlated with one another than brands and attributes located elsewhere on the map. That’s a good place to start, at least.

If a map doesn’t make sense to you, try to figure out why. Just because something is produced by a computer doesn’t mean it’s right…there may be data errors or the modeling might have been careless. Of course, your expectations may be the source of the “error”…if we knew the answer beforehand we wouldn’t bother with mapping!

One of the strong points of Correspondence Analysis is that it automatically adjusts for brand size, so big brands are less likely to dominate the map and the relative profiles of the brands are more evident.

A new brand with an image profile similar to the market leader’s may pose more of a threat to that brand than other small brands do. With a little effort, most other methods can also be coaxed to provide relative rather than absolute strengths.
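The size adjustment can be seen in a bare-bones version of Simple Correspondence Analysis. The sketch below uses the textbook SVD formulation with NumPy rather than any particular package's routine, and the counts and brand labels are invented. It assumes every brand and attribute has at least one association, so that no row or column total is zero.

```python
import numpy as np

def correspondence_analysis(counts, n_dims=2):
    """Simple CA of a brands-by-attributes count table via SVD."""
    N = np.asarray(counts, dtype=float)
    P = N / N.sum()                        # correspondence matrix
    r = P.sum(axis=1)                      # brand (row) masses
    c = P.sum(axis=0)                      # attribute (column) masses
    expected = np.outer(r, c)              # what independence would predict
    S = (P - expected) / np.sqrt(expected) # standardized residuals
    U, sv, Vt = np.linalg.svd(S, full_matrices=False)
    rows = (U * sv) / np.sqrt(r)[:, None]  # brand principal coordinates
    cols = (Vt.T * sv) / np.sqrt(c)[:, None]
    return rows[:, :n_dims], cols[:, :n_dims]

# Hypothetical association counts (brands in rows, attributes in columns).
labels = ["Leader", "Challenger", "Niche", "Value"]
counts = [
    [200, 100, 50],   # market leader
    [20, 10, 5],      # same profile as the leader at a tenth of the size
    [10, 30, 40],
    [40, 15, 60],
]
rows, cols = correspondence_analysis(counts)
for label, xy in zip(labels, np.round(rows, 3)):
    print(label, xy)
```

In this toy table the leader and the small challenger land on the same point, because only their relative profiles matter. A map built on raw counts would instead pull the leader far away from everyone else.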

However, these maps can be confusing and are easily misinterpreted – I recall a comment made at a conference with respect to Correspondence Analysis: “A picture is worth a thousand words. Unless it takes a thousand words to explain the picture.”

Again, don’t interpret the maps too literally.

A few tips

Rather than attempting to summarize what I see as the pros and cons of each mapping method – which would require many pages – let me instead give you some practical guidelines I use myself.

- First and foremost, take mapping seriously and don’t do it mechanically. It can be a very useful tool but, like most analytics, can be misleading if we get careless. Don’t just go with Simple Correspondence Analysis and the default options of your software. As noted, you have many options and they don’t all tell the same story, and the differences in their stories may make a difference to the client.

- Execution is King. Asking respondents to rate attributes that have little relevance to their purchase decisions – only to you or your client – is asking for trouble. Also, putting these questions late in the questionnaire after respondents have been heavily prompted (and are fatigued!) is bad practice. Using a poor-quality respondent panel also won’t pay off.

- Don’t get too hung up on data types. Some methods, at least in their classical form, require metric (numeric) data but, fortunately, most statistical methods are quite robust to violations of mathematical assumptions. If you use Factor Analysis with 0/1 data taken from an association matrix, the world is unlikely to come to a sudden end. That said, if your maps look odd and don’t make sense it could be because of a mismatch between data type and method. Use your judgement and check with your Marketing Science person.

- If you don’t have much time or budget, run Simple Correspondence Analysis and Biplots, and contrast the two. They are both “right” mathematically but usually provide quite different perspectives of the market you are analyzing, and one or the other may be more useful to your client. Don’t do this mindlessly, though, and look into other options besides the default settings.

- Don’t run map after map until you find one you think your client (or boss) will like! History doesn’t always repeat itself and it’s very easy to unknowingly capitalize on chance.

- Principal Components Analysis is the most straightforward of the methods that use consumer-level data. Use Discriminant Analysis when you wish to focus on the differences between brands rather than the total variance in the data. Newer, more sophisticated methods such as Mixture Modeling can combine driver analysis, consumer segmentation and mapping but require special expertise to conduct and interpret.

Further reading

I don’t know of any textbooks devoted specifically to Perceptual Mapping but the statistical details of some of the main methods are covered in depth in the following books:

- Principal Component Analysis (Jolliffe)

- Foundations of Factor Analysis (Mulaik)

- Correspondence Analysis (Beh and Lombardo)

- Discriminant Analysis and Statistical Pattern Recognition (McLachlan)

- Multiple Factor Analysis by Example Using R (Pagès)

- Finite Mixture and Markov Switching Models (Frühwirth-Schnatter)

The documentation of the PC-MDS software by Professor Scott M. Smith offered concise technical descriptions of many of the popular mapping routines but his software is apparently no longer on the market. If you find an old copy of the manual lying around your office, keep it!

The documentation of the popular BrandMap software is excellent and more accessible to non-statisticians. BrandMap’s website also shows several different kinds of maps and may be of interest to readers new to mapping.

To sum up…

Though Perceptual Mapping never lived up to the ballyhoo of its youth, it has matured into one of the most useful tools in the Marketing Science toolkit. A lot of “hard” data are pretty sterile and mapping can bring us closer to consumers. It thrives in the space between qualitative and quantitative research and synergizes well with the new data sources at our fingertips, some of which can now be used in mapping.

I hope you’ve found this interesting and helpful!
