This question about competency frameworks, psychometric tests and 360° surveys etc. is regularly posed in various forms on LinkedIn analytics groups, and inevitably generates a lot of debate. I’d like to discuss it here in the context of the phrase ‘real-world’ via a case-study.

The term “real world” reflects the perception of many senior and operational managers that while competency frameworks and psychometric tests may be effective in the controlled academic environments in which they are created and developed, they may be less effective in their ‘real-world’ organisations. Could there be any truth to their concerns?

Analysts and businesspeople define “effective” differently

To answer this, we need to delve more deeply into the meaning of the term “effective” in this context. Without getting overly academic, analytics practitioners usually consider a framework to be “effective” if it has sufficiently high construct validity; that is, if it accurately measures the construct it claims to measure, such as competency, ability or personality. Now contrast this with operational managers, for whom the term “effective” usually refers to the predictive (or concurrent) validity of the measure; that is, the extent to which organisational investments in employee frameworks predict desirable employee outcomes such as job performance, retention and ultimately profitability.

And herein lies the rub: just because a framework accurately measures the construct it purports to measure (e.g. competency or ability), this construct validity does not mean that the instrument predicts important employee outcomes. It will therefore not necessarily deliver metrics that can be used as useful inputs to create talent programmes specifically designed to maximise performance and retention of high potentials.

How to establish the validity of a framework

So how do you establish the validity of a competency framework or 360° survey etc.?

- You first test concurrent validity on a representative sample of your employees to determine which competencies correlate with desired employee outcomes in the ‘real world’ i.e. in your organisation (as opposed to wherever the vendor claims to have tested it).

- You then refine the framework based on what you learn from these correlations.

- If the potential cost of framework failure is particularly high, you deploy a methodology to test causality/predictive validity.
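The first two steps can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the scale names, the six made-up employees and the 0.20 cut-off (`min_abs_r`) are hypothetical, and a real check would use a representative sample and your own performance measure.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two equal-length samples with at least 3 points")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("a constant scale has no defined correlation")
    return sxy / math.sqrt(sxx * syy)

def screen_scales(scales, outcome, min_abs_r=0.20):
    """Label each scale by its correlation with the outcome.

    min_abs_r is an illustrative cut-off (an assumption), not a standard.
    """
    report = {}
    for name, scores in scales.items():
        r = pearson_r(scores, outcome)
        if r <= -min_abs_r:
            verdict = "negative: review or remove"
        elif r >= min_abs_r:
            verdict = "keep"
        else:
            verdict = "weak: candidate for removal"
        report[name] = (round(r, 2), verdict)
    return report

# Made-up scores for six employees, purely for illustration.
# A real test needs a representative sample of your own workforce.
performance = [52, 61, 58, 70, 75, 80]
scales = {
    "planning": [3, 4, 3, 5, 5, 6],
    "assertiveness": [6, 5, 5, 3, 3, 2],
    "networking": [3, 4, 4, 3, 4, 3],
}

report = screen_scales(scales, performance)
for name, (r, verdict) in report.items():
    print(f"{name}: r={r:+.2f} -> {verdict}")
```

Scales labelled as weak or negative are the ones to question when refining the framework. Note that a correlation of this kind only speaks to concurrent validity; it says nothing about causality.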

A case study

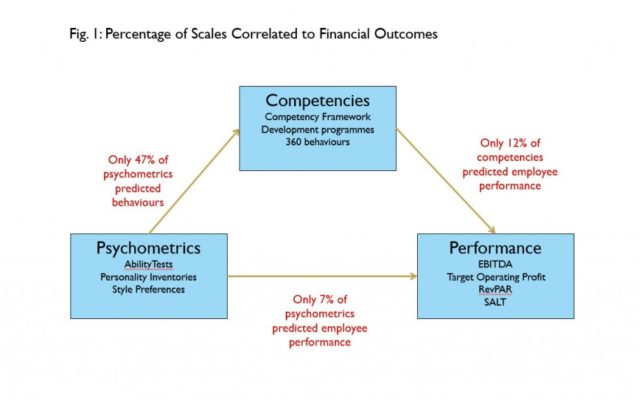

Sadly, few organisations do such testing before procuring frameworks, which can lead to unfortunate results. As an illustration of this, we recently examined trait and learnable competencies data for 250 management employees in the same role at a well-known global blue chip brand, together with their job performance scores (Fig 1).

We modelled them as:

- Psychometric scores: Fixed characteristics unlikely to change over time and therefore potentially useful for selection if they correlated with performance

- Competencies: Learnable behaviours as assessed by the company’s competency framework, 360° survey and development programme assessors. Useful for developing high performance.

- Employee Performance: We were fortunate to obtain financial performance data for each manager which means these scales were reasonably objective. Ultimately, the reason for investing in psychometrics and competencies for selection and development is to achieve high performance here.

We found the following relationships in the data:

- Psychometric and competency scores: As can be seen, only 47% of psychometric test scales correlated with learnable competencies. Effect sizes were small, typically less than 0.20, meaning that they would not be helpful for selecting job candidates likely to exhibit the competencies valued by the organisation. One psychometric test, however, did have a large correlation with competency scores; unfortunately this correlation was negative, meaning that the higher the job candidates’ test scores, the lower their competency scores – hardly what the company intended when it bought this test.

- Psychometric scores and Employee Performance: Only 7% of the psychometric test scales correlated with employee performance and again effect sizes were typically less than 0.20 – meaning that the psychometric tests were not useful for selection. Again, one test shared a negative relationship with performance: this means that if high scores on it were used for candidate selection, the company would be likely to select low performers.

- Competencies and Employee Performance: Only 12% of the learnable competency scales correlated with performance – and again, effect sizes were small meaning that investing in these competencies to drive development programmes was not worthwhile. And once more, some of these competencies had negative correlations with performance, meaning that developing them might actually decrease employee performance.
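To see why a scale can "correlate" with performance and still be of little practical use, consider the arithmetic for a sample of 250. The sketch below is illustrative only: it assumes the effect sizes quoted above are correlation coefficients, uses a hypothetical value of r = 0.15, and applies the Fisher z-transformation as an approximate significance test, which is not necessarily the test used in the case study.

```python
import math

def fisher_z_p_value(r, n):
    """Approximate two-sided p-value for the hypothesis that the true
    correlation is zero, via the Fisher z-transformation."""
    if not -1 < r < 1 or n <= 3:
        raise ValueError("need -1 < r < 1 and n > 3")
    z = math.atanh(r) * math.sqrt(n - 3)
    return math.erfc(abs(z) / math.sqrt(2))

n = 250    # sample size from the case study
r = 0.15   # hypothetical scale correlation, below the 0.20 mark (assumption)

p_value = fisher_z_p_value(r, n)
variance_explained = r ** 2  # share of performance variance the scale accounts for

print(f"approximate p-value: {p_value:.3f}")
print(f"variance explained: {variance_explained:.1%}")
```

With these assumed numbers the correlation is statistically detectable, yet the scale accounts for only a little over 2% of the variation in performance, which is why small effect sizes make poor grounds for selection or development decisions.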

Conclusion

So, to go back to the original question: Do competency frameworks and psychometrics work in real-world organisations? One could hardly blame managers in the above organisation for being somewhat sceptical. This scenario is typical of the results we find when auditing the effectiveness of companies’ investments in competency frameworks and other employee performance measures.

But does it have to be this way? I believe that the answer to this is no, and that frameworks can not only work, but can significantly improve performance in the ‘real world’.

All that is needed is some upfront analysis of the kind I have outlined above. Such analysis shows whether performance is being properly measured and which scales are and are not useful, so that poor scales can be removed and, if necessary, replaced with better predictors or correlates. Typically, we see employee performance improvements of 20% to 40% by simply following this process.

So the answer to the original question is ultimately a qualified “yes” – competency and employee frameworks can work provided that systematic rigour is used in selecting instruments for ‘real world’ applications.

How to get value from competency frameworks and psychometric tests

- Frameworks cannot predict high performance if you don’t know what good looks like. The first step in procuring frameworks is therefore to ensure that “high performance” is clearly defined and measurable in relation to your roles. And if you want to appeal to your customers – operational managers – use operational performance outcomes.

- Before investing in a framework that will potentially have a negative effect on your workforce’s performance, ensure you get an independent analytics firm’s objective evaluation of each scale’s concurrent and/or predictive capabilities in respect of employee populations similar to your own. If the vendor you’re considering purchasing from cannot provide independent evidence, request a discount or get expert advice of your own before purchasing anything.

- If your workforce is larger than around 250 employees, you should certainly get expert advice to ensure that a proposed framework does indeed correlate with or predict performance in your own organisation before making a purchase.

- If you need professional help interpreting quantitative evidence provided by vendors, get it from an independent analyst – never from a statistician working for the vendor trying to sell you the framework.
